TL;DR:

- start with AI autogeneration and have the Agent generate new tests from existing test cases

- prompt the Agent to create specific tests

- use the Recorder instead of the AI Agent as a fallback option or to collaborate with the Agent

- use dependencies

- help the AI Agent by nudging it in the right direction when steps fail to generate

- use run locally if preferred

- work on multiple test cases in parallel

Start with the AI autogeneration

Our AI autogeneration gives you a head start with up to 3 AI-autogenerated tests. You can also have the AI Agent generate more test cases from existing ones: open the test case and hover over the generate more icon until a button appears, then click it. Find out more about how generate more works here.

The AI Agent will autogenerate up to 3 new tests based on the one you started it from. The original test will be added as a dependency. We validate every generated test case.

At sign-up, we automatically generate the close cookie banner and “required” login test cases. These are often necessary prerequisites for all other tests. Learn more.

Create tests by prompting the Agent in natural language

This comes in handy when you want a specific test that the AI Agent didn’t find during autogeneration. There are multiple ways to start with a prompt: use the generate test case button on the overview page or on the test cases page.

Record test steps yourself

If you are familiar with test recorders, you might prefer taking over from the Agent and recording additional test steps, or recording tests from scratch with our new test recorder. Here is how you can use the Recorder in the Octomind app.

Use dependencies

Best practice is to keep test cases short and to the point. Ideally, each test case represents a small task you want to achieve. But what if you want to test whether a full user flow works? A good method for this is test chaining, which means using dependencies. Take three test cases: accept all cookies, login, and open profile page. Every test case runs in isolation and therefore needs to include the full flow, so open profile page would have to include the steps for closing the cookie banner and logging in. Chaining the tests avoids this: make accept all cookies a dependency of login, and make login a dependency of open profile page. This way, both the test runner and the AI Agent use pre-play to execute the dependencies before starting their actual work.

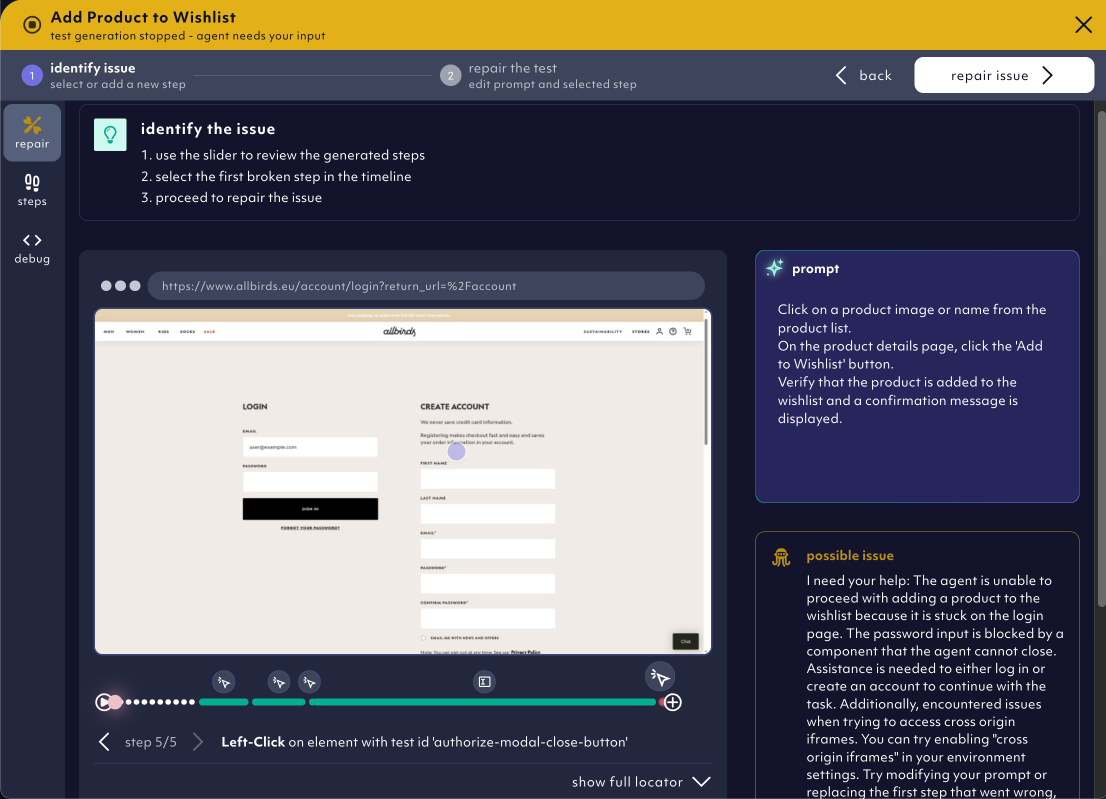
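To make the pre-play idea concrete, here is a minimal TypeScript sketch of how a dependency chain resolves into an execution order. It is a conceptual model only, not Octomind's implementation or API: the `TestCase` interface, the `dependsOn` field, and the `resolvePrePlay` function are hypothetical, and the sketch assumes each test case has at most one dependency, as in the chain described above.

```typescript
// Hypothetical model of a test case: each test may name at most one
// dependency (the test that must run before it). Not Octomind's data model.
interface TestCase {
  name: string;
  dependsOn?: string;
}

// Walks the dependency chain upward from `target` and returns the
// pre-play execution order: root dependency first, target last.
function resolvePrePlay(tests: Map<string, TestCase>, target: string): string[] {
  const chain: string[] = [];
  const seen = new Set<string>();
  let current: string | undefined = target;
  while (current !== undefined) {
    // Guard against misconfigured chains such as A -> B -> A.
    if (seen.has(current)) {
      throw new Error(`Dependency cycle detected at "${current}"`);
    }
    seen.add(current);
    const test = tests.get(current);
    if (test === undefined) {
      throw new Error(`Unknown test case "${current}"`);
    }
    chain.push(test.name);
    current = test.dependsOn;
  }
  // The walk collected target-first, so reverse to run dependencies first.
  return chain.reverse();
}

// The chain from the example: accept all cookies <- login <- open profile page.
const tests = new Map<string, TestCase>([
  ["accept all cookies", { name: "accept all cookies" }],
  ["login", { name: "login", dependsOn: "accept all cookies" }],
  ["open profile page", { name: "open profile page", dependsOn: "login" }],
]);

const order = resolvePrePlay(tests, "open profile page");
console.log(order.join(" -> "));
// prints: accept all cookies -> login -> open profile page
```

Because each test in this model has a single dependency, walking up the chain and reversing it is enough to get the order; a test with several dependencies would need a full topological sort instead.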

Help the AI Agent when it goes wrong

We are constantly improving the AI Agent, but it can still make mistakes. Help it find its way: if the AI Agent signals a failed step with a yellow alert, click on repair test to help the Agent finish the generation successfully.

Follow the Agent's instructions to repair the test.

steps tab of every test case detail.

Run Octomind tests locally

Run locally is most helpful for step-by-step adjustments, since it gives you the current state of a page. It can noticeably speed up your test creation.

You can find the run locally button in the debug tab of your test case. Find out how to run Octomind tests locally.