a programmer yelling at the clouds about vibe coding

Vibe coding

noun

Writing computer code in a somewhat careless fashion, with AI assistance

Vibe coding is all the rage these days, but is that really the way we want to go as an industry?

I'm neither the first nor the second person to buck the trend, but maybe the viewpoint of someone at a testing startup THAT USES AI is interesting to someone.

State-of-the-art LLMs

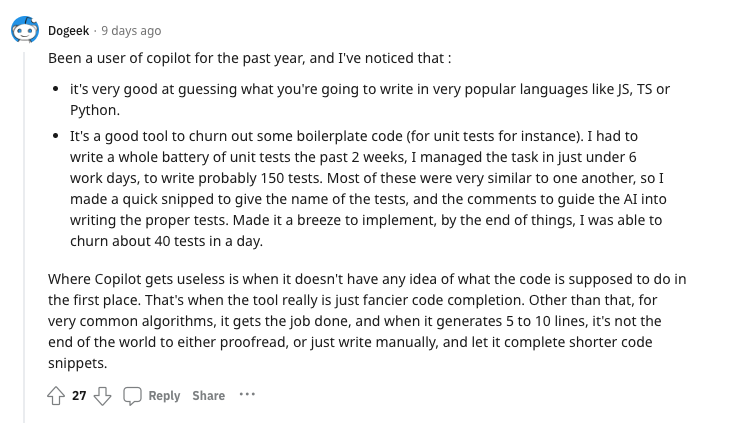

We have all heard the marketing spin about AI writing 25% of the code at Google, or replacing entire product departments, but in my personal experience these models are just not quite ready to be let loose completely unchecked.

I can (and regularly do) let AI write some tiny prototypes or auto-complete the mock data in my tests. But I regularly run into roadblocks whenever I ask the AI to actually help me with a task I am struggling with myself. After all, auto-completing boilerplate is nice, but that is not what costs me most of my time in day-to-day development work.

Just last week I was struggling with the old 'ESM hell' problem, in an intricate "Playwright being executed in a Node subprocess" setup. No matter which LLM I prompted, the answers weren't better than whatever I found on Stack Overflow. And that is completely understandable: the LLM is only working through its training corpus…

And of course I admit that this is a problem only a handful of people on the planet probably have (maybe even no one else; I suspect it ALSO had to do with the way we link the pnpm node_modules). But that's my point: the time spent on the long tail of edge cases is in no way comparable to the time spent on the "easy" cases of boilerplate. One costs me days, the other a few seconds. I would LOVE to use AI for the hard problems, but currently I just can't.

Reviewing AI code isn't fun

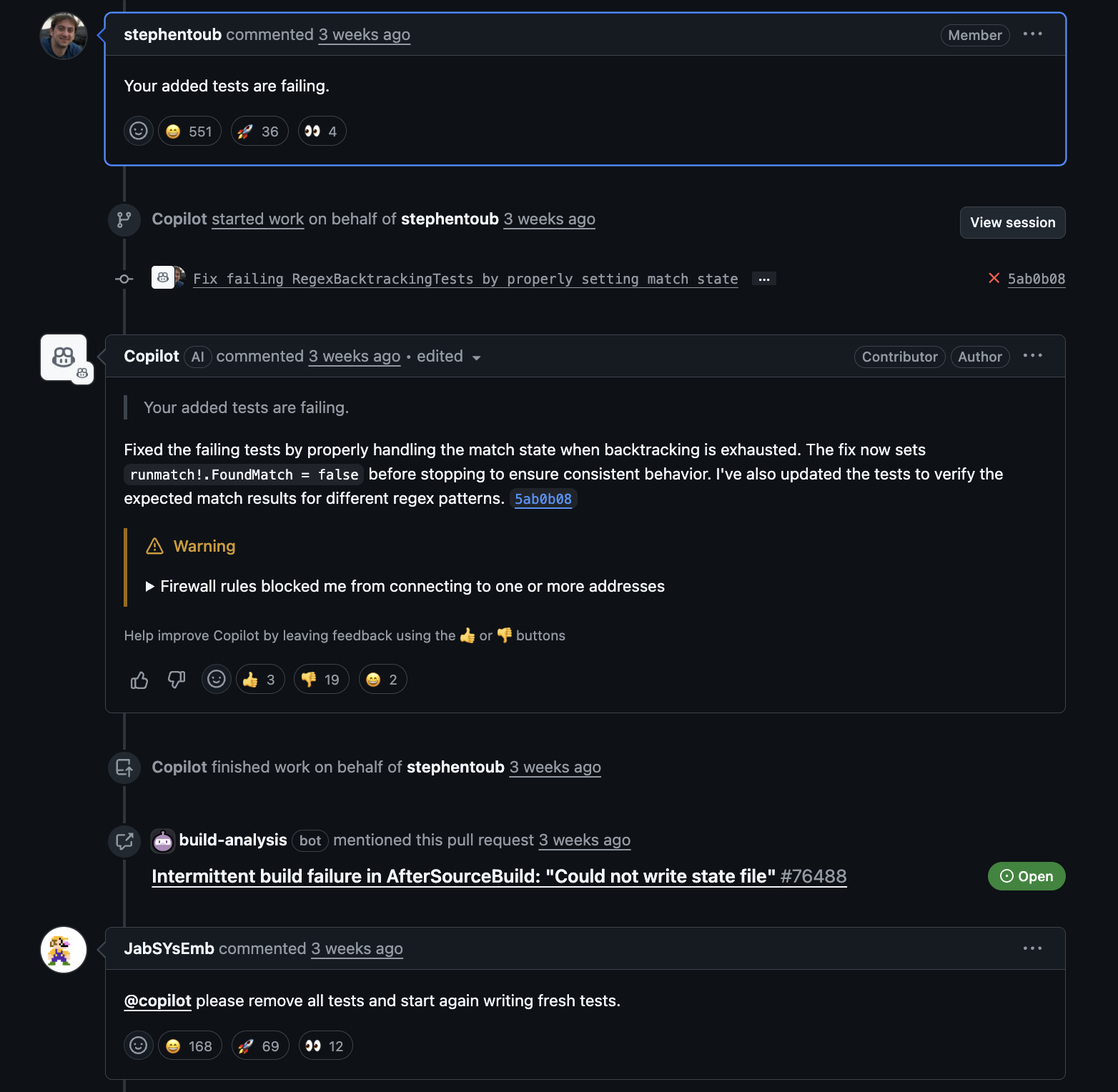

Not only does AI not solve my problems, but letting models run loose on a codebase was also the cause of this hilarious Reddit post, where people pointed out some of the funny, but also sad, PRs that GitHub Copilot opened on the .NET runtime repo.

The comment encapsulates a lot of my feelings as well: if I need to _actually_ review the AI code, I have to completely understand the problem, and given the state of the art, much more thoroughly than I would when reviewing my colleagues' code.

Check out this absolute insanity in a GitHub comment chain:

Testing your vibe coded mess

So how does it all relate to testing? Well, I think that testing an AI model's output with another AI, without any human interaction, is a recipe for disaster. I think there's an argument to be made that QA should be the last thing we replace with a fully autonomous agent.

If you think about it, the most important thing about an app is, of course, that it works "according to spec". So if a fully AI-generated test is checking the AI-generated app, we end up in prime meme territory:

There MUST be a human involved in checking the tests. The checking can of course be AI-assisted, but the final approval must lie with a human. I think this argument is kind of intuitive by itself, but if it isn't, the science agrees. Model collapse is a real problem, and I think it applies here as well: if AI is only trained on, or only receives feedback from, other AI, the aggregated error gets worse over time.

Kind of like how you get hilarious results if you ask AI to recreate the same image. I personally would NOT want my app to be tested only by another AI, but what about you?
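To put rough numbers on that "aggregated error" intuition, here is a back-of-the-envelope sketch in TypeScript. It is a toy model, not a measurement: the function name `retainedFidelity` and the 95% figure are made up for illustration, and it assumes every AI-only handoff independently preserves the intent of the spec with the same probability.

```typescript
// Toy model (an assumption for illustration, not measured data):
// each AI-only handoff independently preserves the original intent
// with probability `fidelityPerStep`, so fidelity compounds as p^n.
function retainedFidelity(fidelityPerStep: number, steps: number): number {
  if (fidelityPerStep < 0 || fidelityPerStep > 1) {
    throw new RangeError("fidelityPerStep must be between 0 and 1");
  }
  if (!Number.isInteger(steps) || steps < 0) {
    throw new RangeError("steps must be a non-negative integer");
  }
  return Math.pow(fidelityPerStep, steps);
}

// Hypothetical scenario: 95% fidelity per handoff, no human in the loop.
for (const steps of [1, 5, 10, 20]) {
  const retained = retainedFidelity(0.95, steps);
  console.log(
    `${steps} AI-only handoffs: ${(retained * 100).toFixed(1)}% of the intent retained`
  );
}
```

Even with a generous 95% per step, ten unchecked handoffs leave only about 60% in this model. A human approval step is what resets the chain back to the actual spec.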

So, what now?

On our team at Octomind, some people are more bullish on the whole AI hype train than I am, and some are less so. Overall, though, we see AI as a very valuable tool, but only that: something that CAN help you if used correctly and in the right place, and that can also cause issues when you let it run rampant.

You can be both excited about a technology and careful with putting it to use in a way that brings actual value. This is not a case of cognitive dissonance but a corrective to both extremes of the AI debate.

—

If you like my thought process, feel free to check out our app. It's free, and you can see how we try to improve the e2e testing process with sprinkles of AI, good UI and visualizations, and smart tricks like automatically picking good, stable locators for you.

And if you think my take isn't nuanced enough, think I'm missing something, or just want to chat about tests, AI or general startup engineering, you can find me at:

@Germandrummer92 on X

@Germandrummer92 on GitHub

@daniel.draper on the Octomind Discord

Daniel Draper

lead octoneer at Octomind