Keep your Copilot and your code quality

Degrading code quality when using AI coding assistants will undermine delivery speed and neutralize the time they save. How do we get quality back on track?

AI-generated issues with code quality

Studies on GitHub Copilot's impact on coding trends reveal a paradox: while it boosts coding speed, it also introduces problems with code quality and maintainability. The latest study, 'Coding on Copilot: 2023 Data Suggests Downward Pressure on Code Quality' by GitClear, confirms the pattern. GitHub's own study found that developers write code "55% faster" when using Copilot, but the quality and maintainability of AI-generated code appear to be declining.

Analyzing over 153 million changed lines of code, the GitClear study predicts doubled code churn in 2024 compared to 2021 and a dip in effective code reuse. For more insights resulting from the study, check out this summary written by David Ramel or watch Theo Browne's reaction to it.

These findings raise important questions about the long-term impact of AI tools in software development. Poor code leads to app failures, revenue loss, and skyrocketing costs to fix the mess.

AI testing and refactoring to course-correct AI-generated code

How do we get quality back on track while keeping the productivity benefits of tools like Copilot? After all, degrading quality will undermine delivery speed and neutralize some of the gains, if not most.

AI might be the answer here, too.

First off, we need to push AI models to churn out code that meets quality standards. This relies heavily on the providers of Copilot-type tools stepping up their game. It's reasonable to assume they'll get better at this. However, it's not a silver bullet.

Second, new tools will make quality control, debugging and refactoring as easy as AI code creation. Startups tackling non-trivial developer problems around code quality have mushroomed in the past two years, even more so since the launch of GPT-4. With decreasing code quality, they will become a necessity. But they will take time to learn and use, which again offsets the time you saved using AI-generated code.

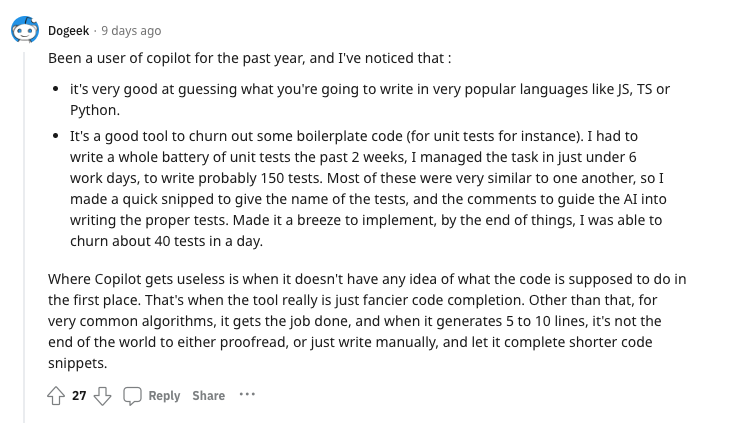

A third addition will be the generation of test code at all levels. Copilot itself already seems to be decent at suggesting helpful unit tests. But it won't be enough.

The case for AI in UI testing

Having AI take care of end-to-end tests is a more complex problem to solve. It is also an essential one: code has to be not only produced fast but also deployed fast. The speed gains from code generation won't materialize otherwise.

Direct test code generation is not there yet, as many evaluations seem to point out, like this analysis of how well GPT-3.5 and GPT-4 handle e2e (Cypress & Playwright) tests.

But what if we approached it differently and used AI for its commonsense knowledge? Think of UI tests as functional e2e tests for the front end. They test functionality based on user interactions. AI can look at an application and interact with it as prompted. This way, AI mimics the user, not the coder. LLMs combined with vision models are a promising route to solving the problem.

AI testing and trust

The biggest challenge with any test suite, and especially with end-to-end tests, is trust. If they don't provide enough coverage, they are not trustworthy. The same applies if they are flaky. Moreover, they need to run reasonably fast; otherwise, they are a pain to use. Adding AI to the equation introduces even more reliability concerns.

The AI needs to provide high enough coverage by producing high-quality, low-flakiness test cases that run fast enough for a short feedback cycle. It also has to be able to auto-fix broken tests to reach meaningful coverage and keep it long term.
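To make "flaky" a bit more concrete, here is a minimal Python sketch of one common way to spot flaky tests: a test counts as flaky if it both passed and failed on the same code revision. The `flaky_tests` function, the tuple layout and the sample history are all assumptions made up for illustration, not how any particular tool (including ours) does it.

```python
from collections import defaultdict


def flaky_tests(runs):
    """Return sorted names of tests that both passed and failed on one revision.

    `runs` is an iterable of (revision, test_name, passed) tuples.
    This record format is a hypothetical one chosen for the example.
    """
    outcomes = defaultdict(set)
    for revision, test_name, passed in runs:
        # Collect the distinct outcomes seen for each test on each revision.
        outcomes[(revision, test_name)].add(bool(passed))
    # Two distinct outcomes on the same revision means the test flipped
    # without any code change.
    return sorted({name for (_, name), seen in outcomes.items() if len(seen) == 2})


# Made-up run history: "checkout" flips on the same revision, "search" fails consistently.
history = [
    ("abc123", "login", True),
    ("abc123", "login", True),
    ("abc123", "checkout", True),
    ("abc123", "checkout", False),
    ("def456", "search", False),
    ("def456", "search", False),
]

print(flaky_tests(history))  # ['checkout']
```

Note that a test which fails every time, like `search` here, is broken rather than flaky. The two cases call for different fixes, which matters if you expect an AI to repair tests automatically.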

To counteract the diminishing trust in AI-generated code, an efficient and trustworthy AI test strategy can go a long way.

That's what we are working on.

We are out with Octomind's beta version but we need your feedback to nail an AI testing tool you will trust and love to use.

Daniel Roedler

Co-founder and CTO / CPO at Octomind